تاريخ الرياضيات

تاريخ الرياضيات

الرياضيات في الحضارات المختلفة

الرياضيات في الحضارات المختلفة

الرياضيات المتقطعة

الرياضيات المتقطعة

الجبر

الجبر

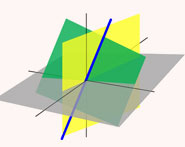

الهندسة

الهندسة

المعادلات التفاضلية و التكاملية

المعادلات التفاضلية و التكاملية

التحليل

التحليل

علماء الرياضيات

علماء الرياضيات |


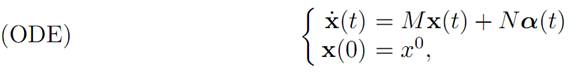

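Consider the linear system of ODE:

$$\dot{x}(t) = Mx(t) + N\alpha(t) \quad (t > 0), \qquad x(0) = x^0, \tag{1.1}$$

for given matrices $M \in \mathbb{M}^{n\times n}$ and $N \in \mathbb{M}^{n\times m}$. We will again take $A$ to be the cube $[-1, 1]^m \subset \mathbb{R}^m$.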
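To make (1.1) concrete, the following Python sketch integrates $\dot{x} = Mx + N\alpha(t)$ for user-supplied matrices and a user-supplied control.

- For illustration only, it assumes the double integrator $M = \begin{pmatrix}0&1\\0&0\end{pmatrix}$, $N = \begin{pmatrix}0\\1\end{pmatrix}$, so $n = 2$ and $m = 1$.
- The helper names (`simulate`, `rhs`, `clip_to_cube`, `bang_bang`) are hypothetical.
- The fixed-step RK4 scheme, with the control held constant over each step, is one convenient choice and not a method taken from the text.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def mat_vec(a: Matrix, v: Vector) -> Vector:
    """Product a @ v for a matrix stored as a list of rows."""
    return [sum(aij * vj for aij, vj in zip(row, v)) for row in a]


def rhs(M: Matrix, N: Matrix, x: Vector, a: Vector) -> Vector:
    """Right-hand side M x + N a of the linear system (1.1)."""
    return [p + q for p, q in zip(mat_vec(M, x), mat_vec(N, a))]


def clip_to_cube(a: Vector) -> Vector:
    """Project a control value onto the cube A = [-1, 1]^m."""
    return [max(-1.0, min(1.0, ai)) for ai in a]


def simulate(M: Matrix, N: Matrix, x0: Vector,
             alpha: Callable[[float], Vector], t_end: float, steps: int) -> Vector:
    """Approximate x(t_end) for x' = M x + N alpha(t), x(0) = x0, by classical RK4.

    The control is sampled at the start of each step and held fixed over the
    step, so a switch placed exactly on a grid point is resolved cleanly.
    """
    h = t_end / steps
    x = list(x0)
    for k in range(steps):
        a = clip_to_cube(alpha(k * h))
        k1 = rhs(M, N, x, a)
        k2 = rhs(M, N, [xi + 0.5 * h * d for xi, d in zip(x, k1)], a)
        k3 = rhs(M, N, [xi + 0.5 * h * d for xi, d in zip(x, k2)], a)
        k4 = rhs(M, N, [xi + h * d for xi, d in zip(x, k3)], a)
        x = [xi + h / 6.0 * (d1 + 2.0 * d2 + 2.0 * d3 + d4)
             for xi, d1, d2, d3, d4 in zip(x, k1, k2, k3, k4)]
    return x


# Illustrative instance (an assumption): the double integrator, n = 2, m = 1.
M = [[0.0, 1.0], [0.0, 0.0]]
N = [[0.0], [1.0]]


def bang_bang(t: float) -> Vector:
    """Hypothetical control: alpha = -1 on [0, 1), then alpha = +1."""
    return [-1.0] if t < 1.0 else [1.0]


print(simulate(M, N, [1.0, 0.0], bang_bang, t_end=2.0, steps=2048))
```

For this instance $M^2 = 0$. RK4 with a control that is constant on each step therefore reproduces the exact flow up to rounding error, and the printed state at $t = 2$ should be the origin to within rounding. For a general $M$ the scheme is only fourth-order accurate.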

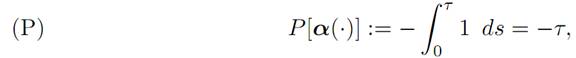

Define next

$$P[\alpha(\cdot)] := -\int_0^{\tau} 1\,ds = -\tau,$$

where $\tau = \tau(\alpha(\cdot))$ denotes the first time the solution of our ODE (1.1) hits the origin $0$. (If the trajectory never hits $0$, we set $\tau = \infty$.)
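A minimal sketch of how $P[\alpha(\cdot)] = -\tau$ might be evaluated numerically follows. It rests on these assumptions:

- The system is the double integrator $\dot{x}_1 = x_2$, $\dot{x}_2 = \alpha$ with $A = [-1, 1]$ (an illustrative choice, not from the text).
- The first hitting time is approximated by the first grid time at which $|x| \le$ `tol`.
- A trajectory that has not reached the origin by `t_max` is treated as $\tau = \infty$.
- The names `payoff` and `bang_bang` and the parameters `h`, `t_max`, `tol` are hypothetical.

```python
import math
from typing import Callable, Tuple


def payoff(x0: Tuple[float, float], alpha: Callable[[float], float],
           h: float = 1.0 / 1024, t_max: float = 10.0, tol: float = 1e-12) -> float:
    """Approximate P[alpha] = -tau for x1' = x2, x2' = alpha(t), x(0) = x0.

    tau is replaced by the first grid time t_k = k*h at which |x(t_k)| <= tol;
    if that never happens before t_max, the function returns -inf (tau = infinity).
    """
    x1, x2 = x0
    for k in range(round(t_max / h) + 1):
        t = k * h
        if math.hypot(x1, x2) <= tol:
            return -t
        a = max(-1.0, min(1.0, alpha(t)))  # keep the control value inside A = [-1, 1]
        # Exact flow over one step of length h with the control held constant.
        x1, x2 = x1 + x2 * h + 0.5 * a * h * h, x2 + a * h
    return -math.inf


def bang_bang(t: float) -> float:
    """Hypothetical control: full braking on [0, 1), then full thrust."""
    return -1.0 if t < 1.0 else 1.0


print(payoff((1.0, 0.0), bang_bang))      # → -2.0
print(payoff((1.0, 0.0), lambda t: 0.0))  # → -inf (the state stays at (1, 0))
```

The one-step update is the exact flow, and the step $h = 2^{-10}$ places the switch at $t = 1$ on the grid, so the first call reproduces $\tau = 2$. A control that switches off the grid would only be resolved to within one step.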

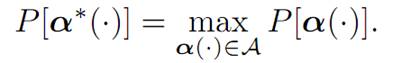

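OPTIMAL TIME PROBLEM: We are given the starting point $x^0 \in \mathbb{R}^n$, and want to find an optimal control $\alpha^*(\cdot)$ such that

$$P[\alpha^*(\cdot)] = \max_{\alpha(\cdot)} P[\alpha(\cdot)],$$

where the maximum is taken over all measurable controls $\alpha(\cdot)\colon [0, \infty) \to A$. Then $\tau^* = -P[\alpha^*(\cdot)]$ is the minimum time to steer to the origin.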
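For the illustrative double integrator $\dot{x}_1 = x_2$, $\dot{x}_2 = \alpha$, $|\alpha| \le 1$ (an assumption, not part of the text), the optimal time problem has a classical closed-form answer. It is built on the switching curve $x_1 = -x_2|x_2|/2$, and the sketch below encodes that formula for $\tau^*$. The function name `minimum_time` is hypothetical.

```python
import math


def minimum_time(x1: float, x2: float) -> float:
    """Minimum time tau* to steer (x1, x2) to 0 for x1' = x2, x2' = alpha, |alpha| <= 1."""
    s = x1 + 0.5 * x2 * abs(x2)  # s = 0 is the switching curve x1 = -x2|x2|/2
    if s > 0:
        # Above the curve: alpha = -1 until the curve is reached, then alpha = +1.
        return x2 + 2.0 * math.sqrt(x1 + 0.5 * x2 * x2)
    if s < 0:
        # Below the curve: alpha = +1 first, then alpha = -1 (mirror image).
        return -x2 + 2.0 * math.sqrt(-x1 + 0.5 * x2 * x2)
    # On the curve: a single arc with constant alpha runs into the origin.
    return abs(x2)


print(minimum_time(1.0, 0.0))  # → 2.0
print(minimum_time(0.0, 1.0))  # 1 + sqrt(2)
```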

THEOREM 1.1 (EXISTENCE OF TIME-OPTIMAL CONTROL). Let $x^0 \in \mathbb{R}^n$. Then there exists an optimal bang-bang control $\alpha^*(\cdot)$.

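Proof. Let $\tau^* := \inf\{t \mid x^0 \in C(t)\}$, where $C(t)$ denotes the set of initial points that can be steered to the origin at time $t$ by some control with values in $A$. We assume this set of times is nonempty, so that $\tau^* < \infty$. We want to show that $x^0 \in C(\tau^*)$; that is, there exists an optimal control $\alpha^*(\cdot)$ steering $x^0$ to $0$ at time $\tau^*$.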

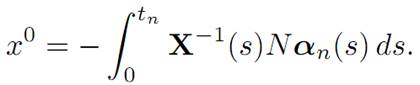

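Choose $t_1 \ge t_2 \ge t_3 \ge \cdots$ so that $x^0 \in C(t_n)$ and $t_n \to \tau^*$. Since $x^0 \in C(t_n)$, there exists a measurable control $\alpha_n(\cdot)\colon [0, \infty) \to A$ such that

$$x^0 = -\int_0^{t_n} X^{-1}(s)N\alpha_n(s)\,ds,$$

where $X(t) = e^{tM}$ is the fundamental matrix of $\dot{x} = Mx$. By the variation of constants formula, the solution of (1.1) is $x(t) = X(t)x^0 + X(t)\int_0^t X^{-1}(s)N\alpha(s)\,ds$, so $x(t_n) = 0$ is equivalent to this identity.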

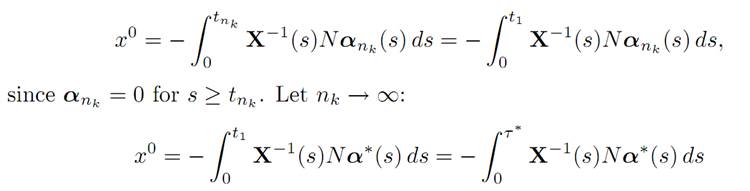

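If necessary, redefine $\alpha_n(s)$ to be $0$ for $s \ge t_n$. This is admissible because $0 \in A$, and it does not change the identity above. Since the $\alpha_n$ take values in the cube $A$, they are bounded in $L^\infty$. By Alaoglu's Theorem, there exist a subsequence $n_k \to \infty$ and a control $\alpha^*(\cdot)$ such that $\alpha_{n_k} \overset{*}{\rightharpoonup} \alpha^*$ (weak-$*$ convergence in $L^\infty$).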
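Weak-$*$ convergence can erase the bang-bang structure. This is why the limit $\alpha^*$ is only known to take values in $A$, and why the proof closes by invoking the bang-bang principle.

As an illustration (the controls and the test function are assumptions chosen for this example), take the bang-bang controls $\alpha_n(s) = \operatorname{sign}(\sin 2\pi n s)$. They converge weakly-$*$ to $0$ on $[0, 1]$: for $g(s) = s$ one computes $\int_0^1 g\,\alpha_n\,ds = -1/(4n) \to 0$. The midpoint-rule sketch below checks this numerically. The names `pair`, `chattering` and `g` are hypothetical.

```python
import math
from typing import Callable

Fn = Callable[[float], float]


def pair(g: Fn, alpha: Fn, cells: int = 100_000) -> float:
    """Midpoint-rule approximation of <g, alpha> = integral_0^1 g(s) alpha(s) ds."""
    h = 1.0 / cells
    return math.fsum(g((j + 0.5) * h) * alpha((j + 0.5) * h) for j in range(cells)) * h


def chattering(n: int) -> Fn:
    """Bang-bang control alpha_n(s) = sign(sin(2 pi n s)), taking only the values -1, +1."""
    return lambda s: 1.0 if math.sin(2.0 * math.pi * n * s) > 0 else -1.0


def g(s: float) -> float:
    """A fixed integrable test function on [0, 1]."""
    return s


for n in (1, 10, 50):
    # The exact pairing is -1/(4n), which tends to 0 = <g, 0> as n grows.
    print(n, pair(g, chattering(n)), -1.0 / (4 * n))
```

Each $\alpha_n$ takes only the values $\pm 1$, yet the weak-$*$ limit is the non-bang-bang control $0$.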

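We assert that $\alpha^*(\cdot)$ is an optimal control. It is easy to check that $\alpha^*(s) = 0$ for $s \ge \tau^*$. Also, with $X(t) = e^{tM}$, since $\alpha_{n_k}(s) = 0$ for $s \ge t_{n_k}$ and $t_{n_k} \le t_1$,

$$x^0 = -\int_0^{t_{n_k}} X^{-1}(s)N\alpha_{n_k}(s)\,ds = -\int_0^{t_1} X^{-1}(s)N\alpha_{n_k}(s)\,ds.$$

Letting $n_k \to \infty$ and using the weak-$*$ convergence (each row of $X^{-1}(\cdot)N$ is integrable on $[0, t_1]$), we obtain

$$x^0 = -\int_0^{t_1} X^{-1}(s)N\alpha^*(s)\,ds = -\int_0^{\tau^*} X^{-1}(s)N\alpha^*(s)\,ds$$

because $\alpha^*(s) = 0$ for $s \ge \tau^*$. Hence $x^0 \in C(\tau^*)$, and therefore $\alpha^*(\cdot)$ is optimal.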
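The identity $x^0 = -\int_0^{\tau^*} X^{-1}(s)N\alpha^*(s)\,ds$ can be checked on a concrete example. Assume, for illustration, the double integrator $M = \begin{pmatrix}0&1\\0&0\end{pmatrix}$, $N = \begin{pmatrix}0\\1\end{pmatrix}$. Then $X(s) = e^{sM} = \begin{pmatrix}1&s\\0&1\end{pmatrix}$ and $X^{-1}(s)N = (-s, 1)^T$.

For $x^0 = (1, 0)$, the control $\alpha^* = -1$ on $[0, 1)$, $+1$ on $[1, 2)$ and $0$ afterwards steers $x^0$ to $0$ at the minimum time $\tau^* = 2$. The hypothetical helper `reached_from` evaluates the right-hand side with the midpoint rule. Integrating up to $t = 3$ instead of $t = 2$ gives the same vector, mirroring the last step above.

```python
from typing import Callable, Tuple


def reached_from(alpha: Callable[[float], float], t_end: float,
                 h: float = 1.0 / 1024) -> Tuple[float, float]:
    """Midpoint-rule value of -integral_0^t_end X^{-1}(s) N alpha(s) ds (double integrator).

    With X(s) = [[1, s], [0, 1]] we have X^{-1}(s) N = (-s, 1)^T, so the
    integrand of the negated integral is (s * alpha(s), -alpha(s)).
    """
    first = second = 0.0
    for j in range(round(t_end / h)):
        # Midpoints; exact here because the switches at s = 1, 2 lie on cell
        # boundaries, so the integrand is linear on every cell.
        s = (j + 0.5) * h
        a = alpha(s)
        first += s * a * h
        second += -a * h
    return first, second


def alpha_star(s: float) -> float:
    """Bang-bang control steering x0 = (1, 0) to the origin at tau* = 2, extended by 0."""
    if s < 1.0:
        return -1.0
    if s < 2.0:
        return 1.0
    return 0.0


print(reached_from(alpha_star, 2.0))  # → (1.0, 0.0), i.e. x0
print(reached_from(alpha_star, 3.0))  # → (1.0, 0.0): integrating past tau* changes nothing
```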

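According to the theorem on extremality and the bang-bang principle, the point $x^0 \in C(\tau^*)$ can also be steered to $0$ at time $\tau^*$ by a bang-bang control. That control is therefore an optimal bang-bang control.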
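For the illustrative double integrator $\dot{x}_1 = x_2$, $\dot{x}_2 = \alpha$ (again an assumption, not part of the text), an optimal bang-bang control can be generated in feedback form. Take $\alpha = -1$ above the switching curve $x_1 = -x_2|x_2|/2$ and $\alpha = +1$ below it.

The sketch below simulates this feedback with an exact zero-order-hold step. The helper names and the stopping tolerance `tol` are hypothetical. The loop stops once $|x| \le$ `tol`, so the reported time undershoots the true hitting time by roughly `tol`. From $(1, 0)$ and $(0, 1)$ the results should come out close to $2$ and $1 + \sqrt{2}$, the known minimum times from those points.

```python
import math


def feedback(x1: float, x2: float) -> float:
    """Time-optimal bang-bang feedback for x1' = x2, x2' = alpha, alpha in [-1, 1]."""
    s = x1 + 0.5 * x2 * abs(x2)  # s = 0 is the switching curve x1 = -x2|x2|/2
    if s > 0:
        return -1.0
    if s < 0:
        return 1.0
    # On the curve itself, ride the arc that ends at the origin.
    return -1.0 if x2 > 0 else 1.0


def time_to_origin(x1: float, x2: float, h: float = 1e-4,
                   tol: float = 1e-2, t_max: float = 20.0) -> float:
    """Simulate the feedback law and return the first grid time with |x| <= tol."""
    for k in range(round(t_max / h)):
        if math.hypot(x1, x2) <= tol:
            return k * h
        a = feedback(x1, x2)  # always -1 or +1: the control is bang-bang
        # Exact flow of the double integrator over one step with alpha held fixed.
        x1, x2 = x1 + x2 * h + 0.5 * a * h * h, x2 + a * h
    return math.inf


print(time_to_origin(1.0, 0.0))
print(time_to_origin(0.0, 1.0))
```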

