تاريخ الرياضيات

تاريخ الرياضيات

الرياضيات في الحضارات المختلفة

الرياضيات في الحضارات المختلفة

الرياضيات المتقطعة

الرياضيات المتقطعة

الجبر

الجبر

الهندسة

الهندسة

المعادلات التفاضلية و التكاملية

المعادلات التفاضلية و التكاملية

التحليل

التحليل

علماء الرياضيات

علماء الرياضيات |

Read More

Date: 6-10-2021

Date: 26-9-2021

Date: 2-10-2021

|

The objective of global optimization is to find the globally best solution of (possibly nonlinear) models, in the (possible or known) presence of multiple local optima. Formally, global optimization seeks global solution(s) of a constrained optimization model. Nonlinear models are ubiquitous in many applications, e.g., in advanced engineering design, biotechnology, data analysis, environmental management, financial planning, process control, risk management, scientific modeling, and others. Their solution often requires a global search approach.

A few application examples include acoustics equipment design, cancer therapy planning, chemical process modeling, data analysis, classification and visualization, economic and financial forecasting, environmental risk assessment and management, industrial product design, laser equipment design, model fitting to data (calibration), optimization in numerical mathematics, optimal operation of "closed" (confidential) engineering or other systems, packing and other object arrangement problems, portfolio management, potential energy models in computational physics and chemistry, process control, robot design and manipulations, systems of nonlinear equations and inequalities, and waste water treatment systems management.

To formulate the problem of global optimization, assume that the objective function  and the constraints

and the constraints  are continuous functions, the component-wise bounds

are continuous functions, the component-wise bounds  and

and  related to the decision variable vector

related to the decision variable vector  are finite, and the feasible set

are finite, and the feasible set  is nonempty. These assumptions guarantee that the global optimization model is well-posed since, by the extreme value theorem, the solution set of the global optimization model is nonempty.

is nonempty. These assumptions guarantee that the global optimization model is well-posed since, by the extreme value theorem, the solution set of the global optimization model is nonempty.

As an example, consider the  -norm error function related to solving the pair of equations

-norm error function related to solving the pair of equations

|

|

|

(1) |

|

|

|

(2) |

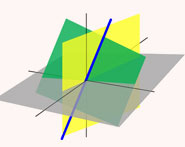

Since we wish to find the globally smallest error and not just a "locally smallest" one, we need to search globally in the two-dimensional box region (see the base area of the picture). There are great many nonlinear optimization problems that possess a similar multi-modal structure.

If we use traditional local scope search methods to solve this problem, then depending on the starting point of the search, we will often find locally optimal solutions of varying quality (cf. the "valleys" in the figure above that could easily trap local search methods). In order to find the globally optimal solution, a global scope search effort is needed.

Several of the most important global optimization strategies are listed below, together with short additional remarks and references. Most global optimization software implementations are based upon one of these approaches, possibly combining ideas from several strategies.

Exact methods include:

1. naive approaches: These include the most well known passive (simultaneous) or direct (not fully adaptive) sequential global optimization strategies such as uniform grid, space covering, and pure random searches. Note that these methods are convergent under mild assumptions but, as a rule, are not practical in higher-dimensional problems (Horst and Pardalos 1995, Pintér 1996a, Zhigljavsky 1991).

2. complete (enumerative) search strategies: These are based upon an exhaustive (and typically streamlined) enumeration of all possible solutions. These are applicable to combinatorial problems, as well as to certain "well-structured" continuous global optimization problems such as concave programming (Horst and Tuy 1996).

3. homotopy (parameter continuation), trajectory methods, and related approaches: These methods have the "ambitious" objective of visiting all stationary points of the objective function: this, in turn, leads to the list of all (global as well as local) optima. This general approach includes differential equation model based, path-following search strategies, as well as fixed-point methods and pivoting algorithms (Diener 1995, Forster 1995).

4. successive approximation (relaxation) methods: In this approach, the initial optimization problem is replaced by a sequence of relaxed sub-problems that are easier to solve. Successive refinement of the sub-problems to approximate the original problem is done e.g., by cutting planes and by more general cuts, diverse minorant function constructions, nested optimization and decomposition strategies and so on. These approaches are applicable to diverse structured global optimization problems such as concave minimization and differential convex problems (Horst and Tuy 1996).

5. branch and bound algorithms: A variety of adaptive partition strategies have been proposed to solve global optimization models. These are based upon partition, sampling, and subsequent lower and upper bounding procedures: these operations are applied iteratively to the collection of active ("candidate") subsets within the feasible set  . Their exhaustive search feature is guaranteed in similar spirit to the analogous integer linear programming methodology. Branch and bound subsumes many specific approaches, and allows for a variety of implementations. Branch and bound methods typically rely on some a priori structural knowledge about the problem. This information may relate, for instance to how rapidly each function can vary (e.g., the knowledge of a suitable "overall" Lipschitz constant, for each function

. Their exhaustive search feature is guaranteed in similar spirit to the analogous integer linear programming methodology. Branch and bound subsumes many specific approaches, and allows for a variety of implementations. Branch and bound methods typically rely on some a priori structural knowledge about the problem. This information may relate, for instance to how rapidly each function can vary (e.g., the knowledge of a suitable "overall" Lipschitz constant, for each function  and

and  ); or to the availability of an analytic formulation and guaranteed smoothness of all functions (for instance, in interval arithmetic-based methods). The general branch and bound methodology is applicable to broad classes of global optimization problems, e.g., in combinatorial optimization, concave minimization, reverse convex programs, DC programming, and Lipschitz optimization (Neumaier 1990, Hansen 1992, Ratschek and Rokne 1995, Kearfott 1996, Horst and Tuy 1996, Pintér 1996a).

); or to the availability of an analytic formulation and guaranteed smoothness of all functions (for instance, in interval arithmetic-based methods). The general branch and bound methodology is applicable to broad classes of global optimization problems, e.g., in combinatorial optimization, concave minimization, reverse convex programs, DC programming, and Lipschitz optimization (Neumaier 1990, Hansen 1992, Ratschek and Rokne 1995, Kearfott 1996, Horst and Tuy 1996, Pintér 1996a).

6. Bayesian search (partition) algorithms: These methods are based upon postulated statistical information, to enable a prior stochastic description of the function-class modeled. During optimization, the problem-instance characteristics are adaptively estimated and updated. Note that, as a rule, only the corresponding one-dimensional model development is exact; furthermore, that in most practical cases "myopic" approximate decisions govern the search procedure. This general approach is applicable also to (merely) continuous global optimization problems. Theoretically, convergence to the optimal solution set is guaranteed only by generating an everywhere dense set of search points. One of the obvious challenges of using statistical methods is the choice and verification of an "appropriate" statistical model, for the class of problems to which they are applied. Additionally, it seems to be difficult to implement rigorous, computationally efficient versions of these algorithms for higher dimensional optimization problems. Note, however, that if one "skips" the underlying Bayesian paradigm, then these methods can also be pragmatically viewed as adaptive partition algorithms, and, as such, they can be directly extended to higher dimensions (Pintér 1996a). For detailed expositions on Bayesian approaches, see Mockus (1989), Törn and Zilinskas (1989), and Mockus et al. (1996).

7. adaptive stochastic search algorithms: This is another broad class of methods, based upon "exhaustive" random sampling in the feasible set. In its basic form, it includes various random search strategies that are convergent, with probability one. Search strategy adjustments, clustering and deterministic solution refinement options, statistical stopping rules, etc. can also be added as enhancements. The methodology is applicable to both discrete and continuous global optimization problems under very mild conditions (Boender and Romeijn 1995, Pintér 1996a, Zhigljavsky 1991).

Heuristic strategies include:

1. "Globalized" extensions of local search methods: These are partially heuristic algorithms that are nonetheless often successful in practice. The essential idea is to apply a preliminary grid search or random search based global phase, followed by applying a local (convex programming) method. For instance, random multistart performs a local search from several points selected randomly from the search domain  . Note that even such sampling is not trivial, when

. Note that even such sampling is not trivial, when  has a complicated shape, as being defined, e.g., by (Lipschitz-)continuous nonlinear functions. Frequently, sophisticated algorithm enhancements are added to this basic strategy. For instance, the clustering of sample points attempts to select only a single point from each sampled "basin" of f from which then a local search method is initiated. (Törn and Zilinskas 1989).

has a complicated shape, as being defined, e.g., by (Lipschitz-)continuous nonlinear functions. Frequently, sophisticated algorithm enhancements are added to this basic strategy. For instance, the clustering of sample points attempts to select only a single point from each sampled "basin" of f from which then a local search method is initiated. (Törn and Zilinskas 1989).

2. evolution strategies: These methods heuristically "mimic" biological evolution: namely, the process of natural selection and the "survival of the fittest" principle. An adaptive search procedure based on a "population" of candidate solution points is used. Iterations involve a competitive selection that drops the poorer solutions. The remaining pool of candidates with higher "fitness value" are then "recombined" with other solutions by swapping components with another; they can also be "mutated" by making some smaller-scale change to a candidate. The recombination and mutation moves are applied sequentially; their aim is to generate new solutions that are biased towards subsets of  in which good, although not necessarily globally optimized, solutions have already been found. Numerous variants of this general strategy based on diverse evolution "game rules" can be constructed. The different types of evolutionary search methods include approaches that are aimed at continuous global optimization problems, and also others that are targeted towards solving combinatorial problems. The latter group is often called genetic algorithms (Goldberg 1989, Michalewicz 1996, Osman and Kelly 1996, Voss et al. 1999).

in which good, although not necessarily globally optimized, solutions have already been found. Numerous variants of this general strategy based on diverse evolution "game rules" can be constructed. The different types of evolutionary search methods include approaches that are aimed at continuous global optimization problems, and also others that are targeted towards solving combinatorial problems. The latter group is often called genetic algorithms (Goldberg 1989, Michalewicz 1996, Osman and Kelly 1996, Voss et al. 1999).

3. simulated annealing: These techniques are based upon the physical analogy of cooling crystal structures that spontaneously attempt to arrive at some stable (globally or locally minimal potential energy) equilibrium. This general principle is applicable to both discrete and continuous global optimization problems under mild structural requirements (van Laarhoven and Aarts 1987, Osman and Kelly 1996, Aarts and Lenstra 1997).

4. tabu search: In this general category of meta-heuristics, the essential idea is to "forbid" search moves to points already visited in the (usually discrete) search space, at least for the upcoming few steps. That is, one can temporarily accept new inferior solutions, in order to avoid paths already investigated. This approach can lead to exploring new regions of D, with the goal of finding a solution by "globalized" search. Tabu search has traditionally been applied to combinatorial optimization (e.g., scheduling, routing, traveling salesman) problems. The technique can be made, at least in principle, directly applicable to continuous global optimization problems by a discrete approximation (encoding) of the problem, but other extensions are also possible (Glover and Laguna 1996, Osman and Kelly 1996, Voss et al. 1999).

5. approximate convex global underestimation: This heuristically attractive strategy attempts to estimate the (postulated) large scale, "overall" convexity characteristics of the objective function  based on directed sampling in

based on directed sampling in  . Applicable to smooth problems (Dill et al. 1997).

. Applicable to smooth problems (Dill et al. 1997).

6. continuation methods: These first transform the potential function into a more smooth ("simpler") function which has fewer local minimizers, and then use a local minimization procedure to trace the minimizers back to the original function. Again, this methodology is applicable to smooth problems. For theoretical background, see Forster (1995).

7. sequential improvement of local optima: These usually operate on adaptively constructed auxiliary functions, to assist the search for gradually better optima. The general heuristic principle includes tunneling, deflation, and filled function approaches (Levy and Gomez 1985).

Pintér (1996b) gave a survey on continuous global optimization software. At present, there are more than a hundred pieces of global optimization software. Global optimization is implemented in the Wolfram Language as NMinimize and NMaximize, and also via the MathOptimizer Professional add-on application package.

For further topical information, see Bliek et al. (2001), Pintér (2002), Fourer (2003), Mittelmann and Spelucci (2004), and Neumaier (2004).

REFERENCES:

Aarts, E. and Lenstra, J. K. (Eds.). Local Search in Combinatorial Optimization. Chichester, England: Wiley, 1997.

Benson, H. P. and Sun, E. "LGO: Versatile Tool for Global Optimization. OR/MS Today 27, 52-55, 2000. http://www.lionhrtpub.com/orms/orms-10-00/swr.html.

Bliek, Ch.; Spelucci, P.; Vicente, L. N.; Neumaier, A.; Granvilliers, L.; Monfroy, E.; Benhamou, F.; Huens, E.; Van Hentenryck, P.; Sam-Haroud, D.; and Faltings, B. "Algorithms for Solving Nonlinear Constrained and Optimization Problems: The State of the Art." COCONUT Project Report. 2001. http://www.mat.univie.ac.at/~neum/glopt/coconut/.

Boender, C. G. E. and Romeijn, E. "Stochastic Methods." In Handbook of Global Optimization: Nonconvex Optimization and Its Applications (Ed. Horst and Pardalos). Dordrecht, Netherlands: Kluwer, pp. 829-869, 1995.

Bomze, I. M.; Csendes, T.; Horst, R.; and Pardalos, P. M. (Eds.). Developments in Global Optimization. Dordrecht, Netherlands: Kluwer, 1997.

Diener, I. "Trajectory Methods in Global Optimization." In Handbook of Global Optimization: Nonconvex Optimization and Its Applications (Ed. Horst and Pardalos). Dordrecht, Netherlands: Kluwer pp. 649-668, 1995.

Dill, K. A.; Phillips, A. T.; and Rosen, J. B. "Molecular Structure Prediction by Global Optimization." In Developments in Global Optimization (Ed. I. M. Bomze, T. Csendes,R. Horst, and P. M. Pardalos). Dordrecht, Netherlands: Kluwer, pp. 217-234, 1997.

Floudas, C. A.; Pardalos, P. M.; Adjiman, C. S.; Esposito, W. R.; Gümüs, Z. H.; Harding, S. T.; Klepeis, J. L.; Meyer, C. A.; and Schweiger, C. A. Handbook of Test Problems in Local and Global Optimization. Dordrecht, Netherlands: Kluwer, 1999.

Forster, W. "Homotopy Methods." In Handbook of Global Optimization: Nonconvex Optimization and Its Applications (Ed. Horst and Pardalos). Dordrecht, Netherlands: Kluwer, pp. 669-750, 1995.

Fourer, R. "Nonlinear Programming Frequently Asked Questions." 2003. http://www-unix.mcs.anl.gov/otc/Guide/faq/nonlinear-programming-faq.html.

Glover, F. and Laguna, M. Tabu Search. Dordrecht, Netherlands: Kluwer, 1996.

Goldberg, D. E. Genetic Algorithms in Search, Optimization, and Machine Learning. Reading, MA: Addison-Wesley, 1989.

Gray, P.; Hart, W. E.; Painton, L.; Phillips, C.; Trahan, M.; and Wagner, J. "A Survey of Global Optimization Methods. http://www.cs.sandia.gov/opt/survey/main.html.

Hansen, E. R. Global Optimization Using Interval Analysis. New York: Dekker, 1992.

Horst, R. and Pardalos, P. M. (Eds.). Handbook of Global Optimization. Dordrecht, Netherlands: Kluwer, 1995.

Horst, R. and Tuy, H. Global Optimization: Deterministic Approaches, 3rd ed. Berlin: Springer-Verlag, 1996.

Kearfott, R. B. Rigorous Global Search: Continuous Problems. Dordrecht, Netherlands: Kluwer, 1996.

Levy, A. V. and Gomez, S. "The Tunneling Method Applied to Global Optimization." In Numerical Optimization 1984 (Ed. P. T. Boggs). Philadelphia: SIAM, pp. 213-244, 1985.

Michalewicz, Z. Genetic Algorithms + Data Structures = Evolution Programs, 3rd ed. Berlin: Springer-Verlag, 1996.

Mittelmann, H. D. and Spellucci, P. "Decision Tree for Optimization Software." 2004. http://plato.la.asu.edu/guide.html.

Mockus J. Bayesian Approach to Global Optimization. Dordrecht, Netherlands: Kluwer, 1989.

Mockus, J.; Eddy, W.; Mockus, A.; Mockus, L.; and Reklaitis, G. Bayesian Heuristic Approach to Discrete and Global Optimization. Dordrecht, Netherlands: Kluwer, 1996.

Neumaier, A. Interval Methods for Systems of Equations. Cambridge, England: Cambridge University Press, 1990.

Neumaier, A. "Global Optimization." 2004. http://www.mat.univie.ac.at/~neum/glopt/glopt.html.

Osman, I. H. and Kelly, J. P. (Eds.). Meta-Heuristics: Theory and Applications. Dordrecht, Netherlands: Kluwer, 1996.

Pintér, J. D. Global Optimization in Action. Dordrecht, Netherlands: Kluwer, 1996a.

Pintér, J. D. "Continuous Global Optimization Software: A Brief Review." Optima 52, 1-8, 1996b. http://plato.la.asu.edu/gom.html.

Pintér, J. D. "Global Optimization: Software, Test Problems, and Applications." Ch. 15 in Handbook of Global Optimization, Volume 2 (Ed. P. M. Pardalos and H. F. Romeijn). Dordrecht, Netherlands: Kluwer, pp. 515-569, 2002.

Pintér, J. D. "Advanced Software Products for Nonlinear Systems Optimization." 2004. http://www.pinterconsulting.com.

Ratschek, H. and Rokne, J. G. "Interval Methods." In Handbook of Global Optimization (Ed. R. Horst and P. M. Pardalos). Dordrecht, Netherlands: Kluwer, pp. 751-828, 1995.

Törn, A. and Žilinskas, A. Global Optimization. New York: Springer-Verlag, 1989.

Van Laarhoven, P. J. M. and Aarts, E. H. L. Simulated Annealing: Theory and Applications. Dordrecht, Netherlands: Kluwer, 1987.

Voss, S.; Martello, S.; Osman, I. H.; and Roucairol, C. (Eds.). Meta-Heuristics: Advances and Trends in Local Search Paradigms for Optimization. Dordrecht, Netherlands: Kluwer, 1999.

Zhigljavsky, A. A. Theory of Global Random Search. Dordrecht, Netherlands: Kluwer, 1991.

|

|

|

|

مخاطر عدم علاج ارتفاع ضغط الدم

|

|

|

|

|

|

|

اختراق جديد في علاج سرطان البروستات العدواني

|

|

|

|

|

|

|

مدرسة دار العلم.. صرح علميّ متميز في كربلاء لنشر علوم أهل البيت (عليهم السلام)

|

|

|