تاريخ الرياضيات

تاريخ الرياضيات

الرياضيات في الحضارات المختلفة

الرياضيات في الحضارات المختلفة

الرياضيات المتقطعة

الرياضيات المتقطعة

الجبر

الجبر

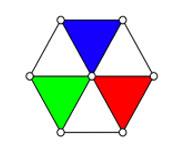

الهندسة

الهندسة

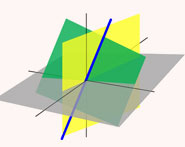

المعادلات التفاضلية و التكاملية

المعادلات التفاضلية و التكاملية

التحليل

التحليل

علماء الرياضيات

علماء الرياضيات |

Read More

Date: 11-10-2015

Date: 11-10-2015

Date: 11-10-2015

|

What was a real number at the end of the 19th century? An intuitive, geometrical or physical quantity, or a ratio of such quantities? An aggregate of things identical in thought? A creation of the human mind? An arbitrary sign subjected to certain rules? A purely logical concept? Nobody was able to decide this with certainty. Only one thing was beyond doubt: there was no consensus of any kind.

Were the real numbers consistent? Would an inconsistency appear one day and much of the mathematical building come tumbling down? Some of the intuitive difficulties that began to be felt revolved around the fact that the real numbers were not countable, that is, they could not be put in 1-1 correspondence with the natural numbers. Cantor proved that the real numbers were not countable in 1874. He produced his famous "diagonal argument" in 1890 which gave a second, more striking, proof that the real numbers were not countable. To do this he assumed that the real numbers were countable, that is they could be listed in order. Suppose that this list is

L = {n1 , n2 , n3 , n4 , ... }

and let d(i, j) be the i-th digit of nj . Define the real number r to have k-th digit 1 if d(k, k) = 2 and r to have k-th digit 2 if d(k, k) ≠ 2. Then the real number r is not in the list L since if it were then it would be nt for some t. But the t-th digit of r differs from the t-th digit of nt by construction, so we have a contradiction. Hence the real numbers are not countable.

We'll construct a certain real number which, although historically not one that was looked at, will let us understand some of the questions that arose. Let us start with the 100 two digit numbers. A simple code will let us translate these into letters, 00 become a, 01 become b, ... , 25 becomes z, 26 becomes A, 27 becomes B, ... , 51 becomes Z, then code all the punctuation marks, and then make all the remaining numbers up to 99 translate to an empty space. Now create a number, say c, starting from the 100 2-blocks.

c = 0.01020304050607080910111213141516171819202122232425...

Then continue with the 10000 pairs of 2-blocks 0000, 0001, 0002, ..., 0099, 0100, 0101, ...

Then the 1000000 triples of 2-blocks etc. We can represent c as a point on a line segment of length 1. Yet every English sentence ever written or ever to be written, occurs in the decoding of c into letters. For example "one third" has 9 characters so will be decoded from c around 1018 digits after the decimal point. This article is there, both with the misprints which inevitably occur and a corrected version is there (but one has to go rather a long way to the right of the decimal point to find it!). The whole of Shakespeare is there, as is every book yet to be written, etc!

Let us use c to describe a paradox which was discovered in 1905. The first thing to notice is that all descriptions of real numbers in English (let us forget about words in other languages) must appear in c, since every possible sentence occurs in c. For example, "one third" will occur as we have noted, as will "the base of natural logs", and "the ratio of the circumference of a circle to its diameter", etc. This will enable us to explain Richard's paradox, discovered by Jules Richard in 1905. There are only a countable number of such descriptions of real numbers in English so all real numbers (except a tiny countable subset) can never be described in English. However, this is not the paradox. Richard obtained that by using Cantor's diagonal argument. Create a real number r, say, as follows:

If the n-th block of c translates into a description of a real number r(n) then set the n-th digit of r to be different to the n-th digit of r(n). If the n-th block of c does not describe a real number (most of course will not even be meaningful in English) then set the n-th digit of r(n) to be 1.

Now the real number r cannot be described in English, since it differs by construction from every real number which can be described in English. That is a bit worrying. Even worse, of course, is the fact that we have just described r in English in the previous paragraph! If Richard's paradox tells us anything then perhaps it is a warning not to use English (or any other language for that matter) when we are doing mathematics.

Emile Borel introduced the concept of a normal real number in 1909. His idea was to provide a test as to whether the digits in a real number occurred in the sort of way they would if we chose each one at random. First assume that we have a real number written in base 10, that is a decimal expansion. Then if it is a "random" number the digit 1 should occur about 1/10 of the time so, if we denote by N(1,n) the number of times 1 occurs in the first n decimal digits, then N(1,n)/n should tend to 1/10 as n tends to infinity. Similarly for the all digits i in the set {0, 1, 2, ..., 9) we should have N(i,n)/n tending to 1/10 as n tends to infinity. But a specific 2-digit number, say 47, should occur among all two digit blocks about 1/100 of the time etc. Borel called a number normal (in base 10) if every k-digit number occurred among all the k digit blocks about 1/10k of the time. He called a number absolutely normal if it was normal in every base b.

Now Borel was able to prove that, in one sense, almost every real number was normal. His proof of this involved showing that the non-normal numbers formed a subset of the reals of measure zero. There were still an uncountable number of non-normal numbers, however, which was easily seen by taking the subset of all real numbers with no digit equal to 1. These are uncountable as can be seen using Cantor's diagonal argument, but clearly they are all non-normal. Clearly no rational is normal since eventually it ends in a repeating pattern. However despite proving these facts, Borel couldn't show that any specific number was absolutely normal. This was achieved first by Sierpinski in 1917.

In his list of problems which he proposed to the International Congress of Mathematicians at Paris in August 1900, Hilbert stated that one of the most pressing issues for the foundations of mathematics was a proof of the consistency of arithmetic. He attempted to solve this himself but was unsuccessful. It was one thing trying to prove arithmetic consistent, but it was known that set theory led to paradoxes. These paradoxes worried many mathematicians and they felt that the foundations of mathematics needed to be built on a logical foundation which did not contain inherent contradictions. Three major paradoxes were due toBurali-Forti in 1897, Russell in 1902, and Richard in 1905. The first of these was derived from the fact that the ordinal numbers themselves formed an ordered set whose order type had to be an ordinal number. Russell's paradox is the well-known one relating to the set of all sets which do not contain themselves as an element, and Richard's paradox we have explained above. Solutions proposed by some mathematicians would only allow mathematics to treat objects which could be constructed. Poincaré (1908) and Weyl (1918) complained that analysis had to be based on a concept of the real numbers which eliminated the non-constructive features. Weyl argued in this 1918 work that analysis should be built on a countable continuum. It was the uncountable, and so non-constructible, aspects of the real line which Weyl felt caused problems.

Gödel proved some striking theorem in 1930. He showed that a formal theory which includes the arithmetic of the natural numbers had to lead to statements which could neither be proved nor disproved within the theory. In particular the consistency of arithmetic was unprovable unless one used a higher order system in which to create the proof, the consistency of this system being equally unprovable. In 1936 Gentzen proved arithmetic consistent, but only by using transfinite methods which were less accepted than arithmetic itself. Although this topic of research is still an active one, most mathematicians accept the uncountable world of Cantor and the non-constructive system of real numbers.

In 1933 David Champernowne, who was an undergraduate at Cambridge University and a friend of Alan Turing, devised Champernowne's number. Write the numbers 1, 2, 3, ..., 9, 10, 11, ... in turn to form the decimal expansion of a number

0.12345678910111213141516171819202122232425262728293031323334353637383940 ...

In The Construction of Decimals Normal in the Scale of Ten published in the Journal of the London Mathematical Society in 1933, Champernowne proved that his number was normal in base 10. In 1937 Mahler proved that Champernowne's number was transcendental. In fact he proved the much stronger result that if p(x) is a polynomial with integer coefficients then the real number obtained by concatenating the integers p(1), p(2), p(3), ... to get

0.p(1)p(2)p(3)...

is transcendental. Champernowne's number is the special case where p(x) = x. In 1946 Copeland and Erdős proved that the number

0.2357111317192329313741434753596167717379838997101103107109113127131137139 ...

obtained in a similar way to Champernowne's number, but using primes instead of all positive integers, was normal. Neither Champernowne's number nor the Copeland and Erdős number is absolutely normal.

It is reasonable to ask whether π, √2, e etc are normal. The answer is that despite "knowing" that such numbers must be absolutely normal, no proof of this has yet been found. In fact although no irrational algebraic number has yet been proved to be absolutely normal nevertheless it was conjectured in 2001 that this is the case.

In 1927 Borel came up with his "know-it-all" number. We illustrate this by again using our number c. Since c contains every English sentence, it contains every possible true/false question that can be asked in English. Create a real number k as follows. If the n-th block of c translates into a true/false question then set the n-th digit of k to be 1 if the answer to the question is true, and 2 if the answer is false. If the n-th block of c does not translates into a true/false question then set the n-th digit of k to be 3. Then k answers very possible question that has ever been asked, or ever will be asked, in English. Borel describes k as an unnatural real number, or an "unreal" real. Borel devotes a whole book [1], which he published in 1952, to discuss another idea, namely that of an "inaccessible number".

An accessible number, to Borel, is a number which can be described as a mathematical object. The problem is that we can only use some finite process to describe a real number so only such numbers are accessible. We can describe rationals easily enough, for example either as, say, one-seventh or by specifying the repeating decimal expansion 142857. Hence rationals are accessible. We can specify Liouville's transcendental number easily enough as having a 1 in place n! and 0 elsewhere. Provided we have some finite way of specifying the n-th term in a Cauchy sequence of rationals we have a finite description of the resulting real number. However, as Borel pointed out, there are a countable number of such descriptions. Hence, as Chaitin writes in [6]:-

Pick a real at random, and the probability is zero that it's accessible - the probability is zero that it will ever be accessible to us as an individual mathematical object.

In 1936 Turing published a paper called On computable numbers. Rather than look at the real numbers which can be described in English, Turing looked at a very precise description of a number, namely one which can be output digit by digit by a computer. He then took Richard's paradox and ran through it again, this time with computable numbers. Clearly computer programs, being composed from a finite number of symbols, are countable. Hence computable numbers are countable. List all computer programs -- in fact they will all occur in the number c above. Create a new real number t by Cantor's diagonal argument whose n-th digit is defined as follows. If the n-th block is a program which outputs a real number, make the n-th digit of t different from the n-th digit of the computable number which is output. If the n-th block is not a valid programme to output a real number, then make the n-th digit of t equal to 1. Now t cannot be computable since, by construction, it differs from each computable number in at least one digit. However, we have just given a recipe to produce the number which could easily be programmed to output t, so t is computable.

Although the "English descriptions" of Richard's paradox must hold the key to the paradox, in this case our "computable numbers" are very precise and not subject to the same difficulties. Do we really have the ultimate paradox which shows that the real numbers are inconsistent? No! So where is the error in our paradox? The error lies in the fact that when we run the computer programmes we do not know whether they will ever output an n-th digit. We can deduce from this argument that it is impossible to tell whether a computer programme which has output k digits will ever output a k+1-st digit.

______________________________________________________________________________________________

Books:

Papers:

|

|

|

|

"عادة ليلية" قد تكون المفتاح للوقاية من الخرف

|

|

|

|

|

|

|

ممتص الصدمات: طريقة عمله وأهميته وأبرز علامات تلفه

|

|

|

|

|

|

|

قسم التربية والتعليم يكرّم الطلبة الأوائل في المراحل المنتهية

|

|

|