CONTROLLABILITY, BANG-BANG PRINCIPLE: DEFINITIONS

Author:

Lawrence C. Evans

Source:

An Introduction to Mathematical Optimal Control Theory

Part and pages:

15-16

3-10-2016

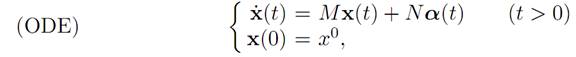

We first recall from Chapter 1 the basic form of our controlled ODE:

$$
\dot{x}(t) = f(x(t), \alpha(t)) \quad (t > 0), \qquad x(0) = x^0.
$$

Here $x^0 \in \mathbb{R}^n$, $f : \mathbb{R}^n \times A \to \mathbb{R}^n$, $\alpha : [0,\infty) \to A$ is the control, and $x : [0,\infty) \to \mathbb{R}^n$ is the response of the system.
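To make the roles of the initial point, the dynamics f, the control α and the response x concrete, here is a minimal numerical sketch. It is not part of the original notes: the function name `simulate`, the forward-Euler discretization and the scalar example system are all assumptions chosen for illustration.

```python
import numpy as np

def simulate(f, alpha, x0, t_final, steps=1000):
    """Forward-Euler approximation of x'(t) = f(x(t), alpha(t)), x(0) = x0.

    f     : callable (x, a) -> array, the dynamics (hypothetical example input)
    alpha : callable t -> control value in A
    Returns the array of visited states, one row per time step.
    """
    x = np.asarray(x0, dtype=float)
    dt = t_final / steps
    path = [x.copy()]
    for k in range(steps):
        t = k * dt
        x = x + dt * np.asarray(f(x, alpha(t)), dtype=float)
        path.append(x.copy())
    return np.array(path)

# Illustrative scalar system x' = a, driven by the constant control a = -1,
# starting from x0 = 1 and run up to time t = 1.
path = simulate(lambda x, a: np.array([a]), lambda t: -1.0, [1.0], t_final=1.0)
```

In this toy case the response decreases at unit speed, so the last row of `path` is (up to rounding) the origin: the control steers the initial point 1 to 0 in time 1.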

This chapter addresses the following basic

CONTROLLABILITY QUESTION: Given the initial point $x^0$ and a “target” set $S \subset \mathbb{R}^n$, does there exist a control steering the system to $S$ in finite time?

For the time being we will therefore not introduce any payoff criterion that would characterize an “optimal” control, but instead will focus on the question as to whether or not there exist controls that steer the system to a given goal. In this chapter we will mostly consider the problem of driving the system to the origin S = {0}.

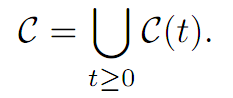

DEFINITION. We define the reachable set for time $t$ to be

$C(t)$ = set of initial points $x^0$ for which there exists a control such that $x(t) = 0$,

and the overall reachable set

$C$ = set of initial points $x^0$ for which there exists a control such that $x(t) = 0$ for some finite time $t$.
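As a small worked illustration of these definitions (an example chosen here, not taken from the source), consider the scalar system $\dot{x}(t) = \alpha(t)$ with controls satisfying $|\alpha(t)| \le 1$. Integrating gives

$$
x(t) = x^0 + \int_0^t \alpha(s)\, ds ,
$$

so $x(t) = 0$ exactly when $x^0 = -\int_0^t \alpha(s)\, ds$. Since the integral can take any value in $[-t, t]$ and no others,

$$
C(t) = [-t, t], \qquad C = \mathbb{R}.
$$

Every initial point can therefore be steered to the origin, but points farther away need more time.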

Note that

$$
C = \bigcup_{t \ge 0} C(t).
$$

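Hereafter, let $\mathbb{M}^{n \times m}$ denote the set of all $n \times m$ matrices. We assume for the rest of this and the next chapter that our ODE is linear in both the state $x(\cdot)$ and the control $\alpha(\cdot)$, and consequently has the form

$$
\dot{x}(t) = M x(t) + N \alpha(t) \quad (t > 0), \qquad x(0) = x^0,
$$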

where $M \in \mathbb{M}^{n \times n}$ and $N \in \mathbb{M}^{n \times m}$. We assume the set $A$ of control parameters is a cube in $\mathbb{R}^m$:

$$
A = [-1, 1]^m = \{ a \in \mathbb{R}^m \mid |a_i| \le 1,\ i = 1, \dots, m \}.
$$
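The linear setting with the cube constraint can be explored numerically. The sketch below is an illustration under stated assumptions, not the author's method: the helper `simulate_linear`, the forward-Euler scheme, the particular matrices (a position/velocity system with bounded acceleration, n = 2, m = 1) and the switching time of the control are all chosen here for the example.

```python
import numpy as np

def simulate_linear(M, N, alpha, x0, t_final, steps=2000):
    """Forward-Euler approximation of x' = M x + N alpha(t), x(0) = x0.

    Control values are clipped componentwise to [-1, 1], so they always
    lie in the cube A = [-1, 1]^m.
    """
    M = np.asarray(M, dtype=float)
    N = np.asarray(N, dtype=float)
    x = np.asarray(x0, dtype=float)
    dt = t_final / steps
    for k in range(steps):
        a = np.clip(np.atleast_1d(alpha(k * dt)), -1.0, 1.0)  # enforce a in A
        x = x + dt * (M @ x + N @ a)
    return x

# Hypothetical example: x = (position, velocity), control = acceleration.
M = [[0.0, 1.0], [0.0, 0.0]]
N = [[0.0], [1.0]]

# A control that only takes the extreme values -1 and +1, switching at t = 1.
bang_bang = lambda t: -1.0 if t < 1.0 else 1.0

x_end = simulate_linear(M, N, bang_bang, x0=[1.0, 0.0], t_final=2.0)
```

For this choice of data the exact solution brakes from position 1 at rest to the origin at time 2, so `x_end` should be close to (0, 0) up to discretization error. In the notation above, this suggests that the initial point (1, 0) belongs to C(2) for this system.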
